testiloquent


About SW Bugs

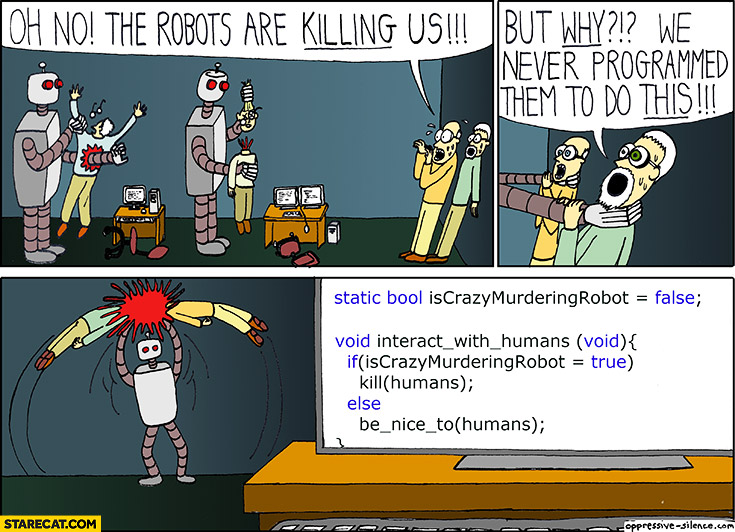

Computers do what you tell them to do, whether or not that’s what you really had in mind. – Weinberg.

What Constitutes a Bug

If we define quality as value to some person (Weinberg, 1998, 2.i.), a bug report is a statement that some person considers the product less valuable because of the thing being described as a bug. – Kaner, Bach, Pettichord.

Note: the term “defect” is commonly used in the service industry in Switzerland. It may carry more negative connotations in IT organisations that build commercial software or build software on contract.


The concept of bugs is inextricable from the concept of quality. Bugs are a risk to the value of a software product, as perceived by some person. What counts as a bug depends on what - in that context - counts as quality.

The problem of evaluating “success” or “failure” as applied to large Systems is compounded by the difficulty of finding proper criteria for such evaluation. What is the System really supposed to be doing? – Gall.

Oracles

Perhaps the most common objective of testing is to identify issues that could negatively impact quality. Oracles are the means by which bugs can be identified. In his 1979 classic “The Art of Software Testing”, Glenford Myers examined data from IBM development projects, which showed that testers were less likely to recognise bugs when their tests didn’t include defined expected results to compare with the actual test results. Such expected results in the description of tests were often (unironically) called oracles, after the soothsayers of Greek antiquity.

The oracle’s powers were highly sought after and never doubted. Any inconsistencies between prophecies and events were dismissed as failure to correctly interpret the responses, not an error of the oracle. – Wikipedia, Oracle of Delphi

Oracles are heuristic devices - rules of thumb - that help us make decisions. They are useful in the right context, but not infallible.
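As a minimal sketch of these ideas, the Python snippet below checks a deliberately buggy function with two oracles: a rule of thumb that only asks whether the output is in ascending order, and a defined expected result. The function `sort_numbers`, both oracle helpers, and the test data are hypothetical, invented purely for illustration.

```python
def sort_numbers(values):
    """Hypothetical function under test. It contains a deliberate bug:
    going through set() silently drops duplicate values."""
    return sorted(set(values))


def ordering_oracle(actual):
    """Heuristic oracle: a sorted list must be in ascending order."""
    return all(a <= b for a, b in zip(actual, actual[1:]))


def expected_result_oracle(actual, expected):
    """Oracle with a defined expected result to compare against."""
    return actual == expected


actual = sort_numbers([3, 2, 1, 2])

# The rule of thumb is satisfied, so this oracle alone misses the bug.
heuristic_verdict = ordering_oracle(actual)

# Comparing against the expected result exposes the dropped duplicate.
expected_verdict = expected_result_oracle(actual, [1, 2, 2, 3])

print(f"ordering oracle passed: {heuristic_verdict}")
print(f"expected-result oracle passed: {expected_verdict}")
```

The ordering check is useful but fallible here. The expected-result check catches this particular bug, yet it is only as reliable as the expected value someone wrote down.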

The role of oracles in quality assurance

Quality assurance can be seen as a chain of interpretations, predictions, decisions and actions in a certain context. It doesn’t start with the testing process, nor do test results mark the end of it. Rather, testing activities such as the application of oracles are essential links in this chain.

We use oracles to detect issues that pose a risk to the quality of the product - information used in subsequent links in the quality assurance chain. When our oracles fail, the impacts that arise downstream can be dramatic. Thus, for professional testers, honing skills in selecting, validating and refining oracles is an ongoing quest.

No test has one true oracle. The best we can achieve are useful approximations. – Kaner.

While the sum of all quality assurance activities determines what state the software will be in when it is delivered to customers, what happens then is ultimately out of our hands.

In general, though the answers to questions posed by testing should have the potential of reducing risk, assessment of risk is subjective. It has to be, because it’s about the future, and - as Woody Allen, Niels Bohr, and Yogi Berra all reportedly have quipped - we can predict anything except the future. – Gerald Weinberg

There Will Be Bugs

The realization came over me with full force that a good part of the remainder of my life was going to be spent in finding errors in my own programs. – Aspray, Campbell-Kelly.

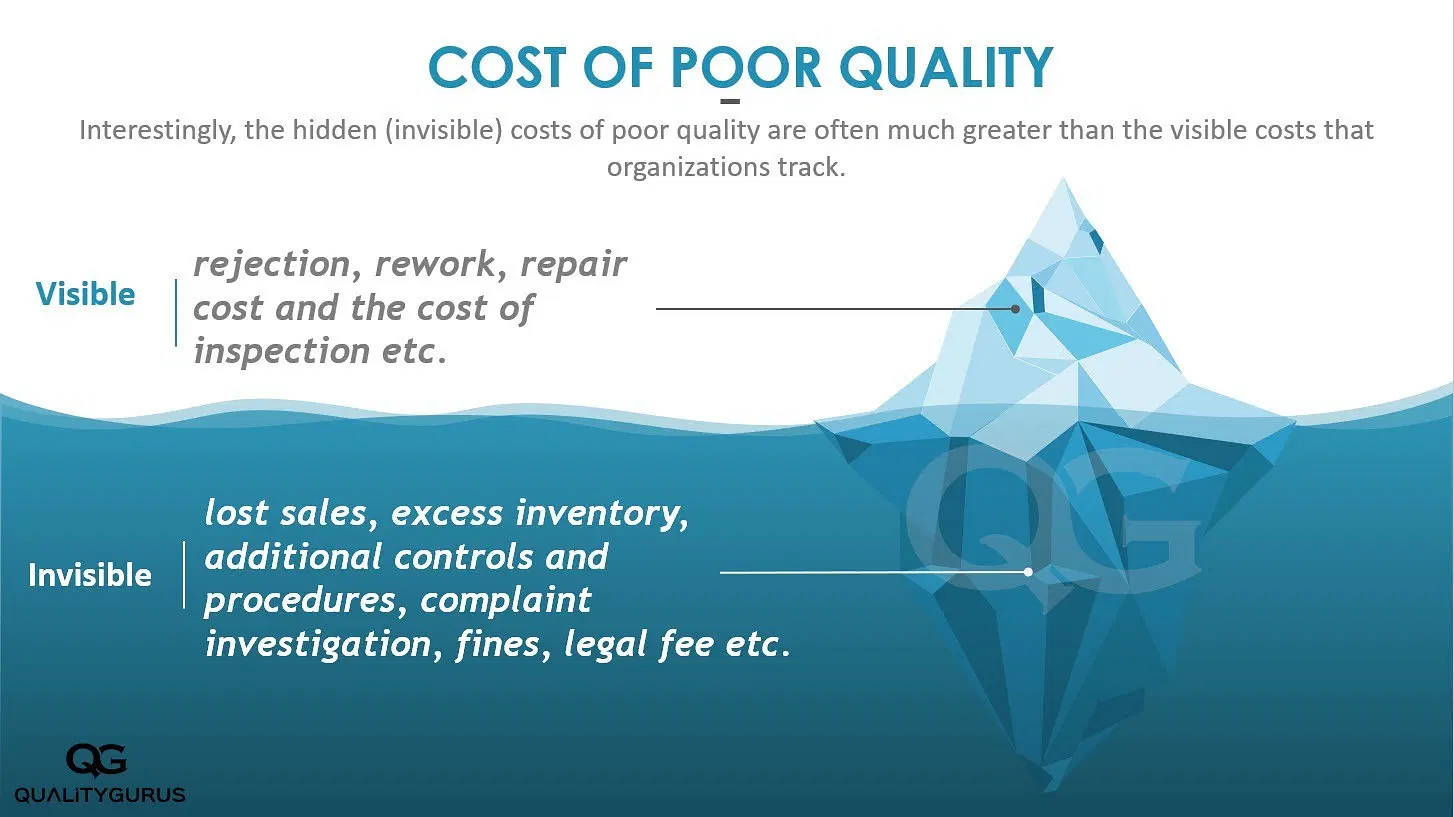

From Testing Computer Software we learn that in 1999, an estimated “15 to 40 percent of the manufacturer’s costs of almost any American product … is for waste embedded in it - waste of human effort, waste of machine-time, nonproductive use of accompanying burden.” – Kaner, Falk, Nguyen. With the rise of Agile software development and DevOps practices in the 2000s, and with increasingly powerful tools [2], today’s software development is better equipped to prevent some types of bugs. Still, software defects remain a costly inevitability.

The complete testing fallacy

It’s not possible to prove the absence of bugs.

As fallible humans are the ones conceiving of, designing, building and maintaining the vast majority of the software in use, it is safe to assume that all software applications contain bugs.
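A rough back-of-the-envelope calculation can illustrate why exhaustively testing even a tiny program is out of reach. The sketch below assumes a hypothetical function that takes two 32-bit integers, and an invented (and optimistic) rate of one billion test executions per second; neither figure comes from the sources quoted here.

```python
# Assumptions for illustration only: two independent 32-bit integer inputs
# and a test rig that executes one billion test cases per second.
BITS_PER_INPUT = 32
NUMBER_OF_INPUTS = 2
TESTS_PER_SECOND = 1_000_000_000

SECONDS_PER_YEAR = 365 * 24 * 60 * 60

# Every combination of input values is a distinct test case.
total_cases = 2 ** (BITS_PER_INPUT * NUMBER_OF_INPUTS)

years_needed = total_cases / TESTS_PER_SECOND / SECONDS_PER_YEAR

print(f"{total_cases:,} input combinations")
print(f"about {years_needed:,.0f} years to run them all")
```

Even if every case were run and passed, the result would only show agreement with whatever oracle was used to judge each case.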

Some bugs can be prevented

SW Bugs in Context

Whose Risk Is It?

Statistically, the expected value of a fault is the probability of experiencing it times the cost if it is experienced. And yet, even if you use the expected value, there’s another complication: Who will incur the cost of a failure? And do we care? – Weinberg.
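The expected-value calculation in the quote can be sketched in a few lines of Python. The `Fault` record, the fault names, and all probabilities and costs below are invented for illustration; the `borne_by` field is an assumed way of recording who incurs the cost of a failure.

```python
from dataclasses import dataclass


@dataclass
class Fault:
    """Hypothetical fault record; all figures below are invented."""
    name: str
    probability: float  # chance of experiencing the fault, 0.0 to 1.0
    cost: float         # cost incurred if it is experienced
    borne_by: str       # who incurs that cost


def expected_value(fault):
    """Expected value = probability of experiencing it * cost if experienced."""
    return fault.probability * fault.cost


faults = [
    Fault("checkout crash", probability=0.02, cost=5_000.0, borne_by="vendor"),
    Fault("data loss on export", probability=0.001, cost=200_000.0, borne_by="customer"),
]

# Sum the expected value per party to make visible who carries the risk.
risk_by_party = {}
for fault in faults:
    risk_by_party[fault.borne_by] = (
        risk_by_party.get(fault.borne_by, 0.0) + expected_value(fault)
    )

for party, risk in risk_by_party.items():
    print(f"{party}: expected cost {risk:,.2f}")
```

Grouping by party keeps the second question from the quote in view: a fault with a small expected value for the vendor may still carry a large one for someone else.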

Stakeholders Are People, Too

significance is the emotional impact of the meaning you attach to the test result.

For instance, if I determine that a bug in some software I bought makes it unusable, I might say, “Oh, that’s okay. I can afford ninety-nine dollars, so it’s not worth fussing about it.” But if I were flat broke, I might attach a different significance: “That’s completely unacceptable. I’m going to Small Claims Court to sue to get my money back!”

Alternatively, the significance I attach to the bug might not have to do with money at all, but instead might be attached to a bias I have against or in favor of the software vendor. – Weinberg.

Fine Today, Bug Tomorrow

“obvious” errors can remain dormant in products - especially when the product is software - for a very long time. Software may even have bugs that were not bugs when the system shipped, such as functions that fail when an application is used with new hardware or a new operating system. – Weinberg.

The Eye Of the Beholder

“There are no wrong programs, only different programs.” If you don’t know what a program is supposed to do, you can never say with certainty that it’s wrong. – Weinberg.


1. What went wrong here? Have a look at this reddit discussion about the code error.

2. This article on complexity in software development discusses the kinds of errors that can be eliminated with the use of modern tooling.